The potential of artificial clouds

What if?

Consider the scenario of a panel discussion or a question-and-answer session featuring a group of experts in the relevant field. While these participants possess significant knowledge, they may also exhibit a degree of bias due to their established perspectives. Introducing an artificial intelligence (AI) as one of the participants could provide a refreshing dynamic. This AI could be represented on stage through an animated avatar, allowing for direct voice interaction with the human experts in real time. Powered by generative AI technology, this virtual participant could offer alternative viewpoints and insights, enriching the discussion with a broader range of knowledge.

How can we put such a concept into practice?

In September 2024, colleagues from msg's Public Sector division, together with the msg Artificial Intelligence cross-divisional unit, implemented this scenario for the Nordl@nder Digital conference. They integrated three cloud-based AI services into the prototype application Dr. A.I. Futura, which was derived from an existing technology demo by HeyGen, a company specializing in AI-generated avatars:

- The production-grade OpenAI Whisper cloud service implemented the speech-to-text component, which converts the spoken words of the human conference participants into written text for the chat component.

- The production-grade OpenAI GPT cloud service implemented the text-to-text (chat) component, which turns textual questions into textual answers, much like ChatGPT.

- Finally, the experimental HeyGen Interactive Avatar cloud service implemented the downstream text-to-speech component, which converts GPT's written answers into natural-sounding speech and renders them as video of a lip-synchronized avatar.
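The three-stage chain above can be sketched as a small orchestration loop. This is a minimal sketch under stated assumptions, not the prototype's code: the class `AvatarPipeline`, its method `handle_utterance` and the stage signatures are hypothetical, and the stub lambdas stand in for the Whisper, GPT and HeyGen calls, whose real APIs are deliberately not shown.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical stage signatures. In the prototype these would wrap calls to
# OpenAI Whisper, OpenAI GPT and HeyGen Interactive Avatar; the real API
# calls are left out of this sketch.
SpeechToText = Callable[[bytes], str]               # audio -> transcript
TextToText = Callable[[List[Dict[str, str]]], str]  # chat history -> answer
TextToSpeech = Callable[[str], None]                # answer -> avatar audio/video


@dataclass
class AvatarPipeline:
    stt: SpeechToText
    t2t: TextToText
    tts: TextToSpeech
    history: List[Dict[str, str]] = field(default_factory=list)

    def handle_utterance(self, audio: bytes) -> str:
        """Run one question through all three stages and return the answer text."""
        question = self.stt(audio)                    # 1. speech-to-text
        self.history.append({"role": "user", "content": question})
        answer = self.t2t(self.history)               # 2. text-to-text (chat)
        self.history.append({"role": "assistant", "content": answer})
        self.tts(answer)                              # 3. text-to-speech + avatar
        return answer


# Usage with stub stages instead of real cloud services:
spoken: List[str] = []
pipeline = AvatarPipeline(
    stt=lambda audio: audio.decode("utf-8"),          # stub: bytes already hold text
    t2t=lambda history: "You asked: " + history[-1]["content"],
    tts=spoken.append,                                # stub: record what the avatar says
)
answer = pipeline.handle_utterance(b"What is the Wiesn?")
print(answer)
```

Passing the whole history to the chat stage (in the role/content shape common to chat APIs) is a design choice that lets the virtual panelist keep context across several questions; a real implementation might trim this history to control cost and latency.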

How can we integrate this into an event?

The positive reception of this solution during the panel discussion at the public conference naturally prompted us to offer the same feature at our events in the msg film studio. In studio production, however, the critical factor is the smooth and reliable integration of such software components. Because the existing prototype could only be integrated to a limited extent, we started developing a new application, Studio AI, at the beginning of October 2024; it is also available as open source software.

Screenshot of Studio AI, with the left side showcasing control mode in Google Chrome and the right side illustrating render mode in OBS Studio.

As its name suggests, the application focuses on integrating artificial intelligence smoothly into a studio setting. On the input side (speech-to-text), an operator can intervene flexibly; on the output side (text-to-speech), the resulting avatar can be picked up easily and fed back into the production process.

In Studio AI, the speech-to-text component is implemented with the Deepgram AI cloud service, which offers lower latency and higher recognition quality. You can now even speak in the Bavarian dialect: “Servus, sog amoi: wen mir in Minga von da Wiesn redn, wos moana mir da eigentlich?” (in High German: “Hallo, sag mal: wenn wir in München von der Wiese sprechen, was meinen wir da eigentlich?”; in English: “Hello, tell me: when we in Munich talk about the meadow, what do we actually mean?”). The downstream text-to-text component then has a real chance of answering “Oktoberfest”.

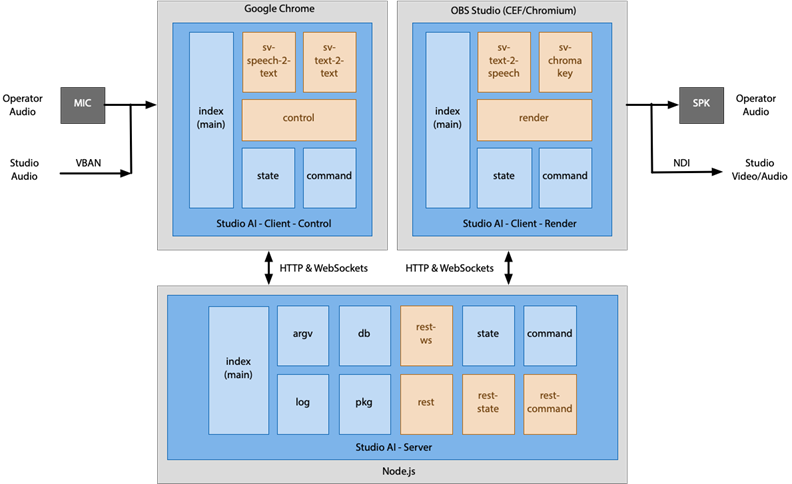

The diagram below shows the architecture of Studio AI, which follows a client/server model. The client is started twice, each instance running in a different mode. In control mode (see the screenshot above, left), the operator uses a standard web browser to configure all parameters of the three AI cloud services in detail and then to control these services at runtime. In render mode (see the screenshot above, right), the avatar is displayed on its own inside a video mixing application such as OBS Studio, where it is picked up for further processing.

Architecture (functional view) of Studio AI
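The control/render split described above can be sketched as a small server-side hub that relays operator input to render clients and status back to the operator. This is a minimal sketch under assumptions, not Studio AI's code: the `StudioHub` class, its methods and the message shapes are hypothetical, and plain callbacks replace a real network transport (in a browser-based setup, WebSockets would be a natural but here merely assumed choice).

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]
Send = Callable[[Message], None]


@dataclass
class StudioHub:
    """Hypothetical server-side hub between control-mode and render-mode clients."""
    config: Dict[str, Any] = field(default_factory=dict)
    control_clients: List[Send] = field(default_factory=list)
    render_clients: List[Send] = field(default_factory=list)

    def connect(self, mode: str, send: Send) -> None:
        """Register a client; the same client code is started once per mode."""
        if mode == "control":
            self.control_clients.append(send)
        elif mode == "render":
            self.render_clients.append(send)
        else:
            raise ValueError(f"unknown mode: {mode!r}")
        # Bring a late-joining client up to date with the current configuration.
        send({"type": "config", "data": dict(self.config)})

    def from_control(self, msg: Message) -> None:
        """Apply operator input and relay it to every render client."""
        if msg["type"] == "config":
            self.config.update(msg["data"])
        for send in self.render_clients:
            send(msg)

    def from_render(self, msg: Message) -> None:
        """Relay status (e.g. 'avatar is speaking') back to the operator."""
        for send in self.control_clients:
            send(msg)


# Usage: one operator browser tab and one OBS render client, simulated with lists.
operator_inbox: List[Message] = []
obs_inbox: List[Message] = []
hub = StudioHub()
hub.connect("control", operator_inbox.append)
hub.from_control({"type": "config", "data": {"language": "de"}})
hub.connect("render", obs_inbox.append)
hub.from_control({"type": "speak", "data": {"text": "Servus!"}})
hub.from_render({"type": "status", "data": {"speaking": True}})
```

Sending the current configuration on connect is a design choice that lets the render client be restarted mid-production without the operator having to re-enter any settings.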

What does the result look like?

Let's look at a short demonstration in which I had a brief, light-hearted conversation with an AI avatar driven by Studio AI in the msg film studio. Despite occasional latencies of around four seconds, the avatar fits remarkably well into the production, as the video shows. The operator's control-mode client is shown in real time at the bottom left of the demonstration, so you can see how the avatar is controlled behind the scenes.

The operator's main task here is to pass the speaker's audio to the speech-to-text component (top left of the screen share) only when the AI is being addressed. The rest of the time, the text-to-text component (right side of the screen share) and the downstream text-to-speech component (bottom left of the screen share) run in their automatic modes.
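The operator's gating role can be sketched as a simple push-to-talk gate in front of the speech-to-text stage. The class `AudioGate` and its methods are hypothetical illustrations, not Studio AI's implementation; the `forward` callback stands in for whatever streams audio frames to the speech-to-text service.

```python
from typing import Callable, List


class AudioGate:
    """Hypothetical operator-controlled gate in front of the speech-to-text stage."""

    def __init__(self, forward: Callable[[bytes], None]) -> None:
        self._forward = forward  # e.g. a function streaming frames to the STT service
        self._open = False       # closed by default: nothing is forwarded

    def open(self) -> None:
        """Operator action: the AI is being addressed."""
        self._open = True

    def close(self) -> None:
        """Operator action: the humans are talking among themselves again."""
        self._open = False

    def push(self, frame: bytes) -> bool:
        """Forward one audio frame if the gate is open; drop it otherwise."""
        if self._open:
            self._forward(frame)
            return True
        return False


# Usage: only the frame spoken while the gate is open is forwarded.
forwarded: List[bytes] = []
gate = AudioGate(forwarded.append)
gate.push(b"panel talk")
gate.open()
gate.push(b"question for the AI")
gate.close()
gate.push(b"more panel talk")
print(forwarded)  # [b'question for the AI']
```

Dropping frames while the gate is closed, rather than buffering them, means that speech not addressed to the AI is never sent to the cloud service at all.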

What insights can we gain from this?

Developing the prototype and the Studio AI application taught us several lessons:

- Orchestration: Sometimes a single AI service is not enough. Instead, several services have to be integrated at the API level and coordinated at runtime; in this scenario, three distinct services were involved.

- Creativity: Putting AI to practical use usually requires some creativity in how the pieces are integrated. It also requires knowing which services are available on the market, how good they are, and what integration options they offer.

- Cloud: Many AI services can be run locally with considerable effort (for example, language models via Ollama); however, using established cloud services is considerably more productive and efficient. It is more productive because you reach an executable solution faster, which shortens time-to-market. It is more efficient because local IT resources often cannot meet the substantial demands of generative AI, and even when they can, much of that capacity is wasted, since such solutions are rarely fully utilized over their entire runtime.

- Effort: Developing the Studio AI application to industrial standards took approximately 250 hours of work, and the application comprises a total of 4,600 lines of code (LoC). This shows that the effort required for this kind of AI cloud integration is quite manageable in practice. Note, however, that these working hours assume solid expertise in web technologies, cloud computing, artificial intelligence, and multimedia.

- Data protection: Using cloud services means taking into account both GDPR compliance and the requirements for commissioned data processing (data processing agreements with the providers). Because these services involve artificial intelligence, the EU AI Act is relevant as well. For each production, it must be ensured that only the intended speech segments are fed into the AI, that everyone involved has given explicit consent beforehand, that no personal data is retained in any form after processing, and that no confidential information passes through the solution.

- Gimmick: Finally, this kind of AI may well be perceived as nothing more than a nice little gimmick. But not everything in life has to be dry and boring to gain acceptance. To spark genuine enthusiasm for AI, we should recognize how much value lies in the enjoyment such solutions can provide.
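To put the effort figures from the list above into perspective, they can be turned into a rough productivity rate. The eight-hour working day used for the second conversion is an assumption, not a figure from the project:

$$
\frac{4600\ \text{LoC}}{250\ \text{h}} = 18.4\ \text{LoC/h},
\qquad
\frac{250\ \text{h}}{8\ \text{h/day}} = 31.25 \approx 31\ \text{person-days}
$$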

Conclusion

The Studio AI application shows what generative AI and cloud computing can already do today. I envision a future in which conventional business information systems make far greater use of such AI cloud services, possibly even including a genuine voice interface of this kind.